Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Semi-obligatory thanks to @dgerard for starting this)

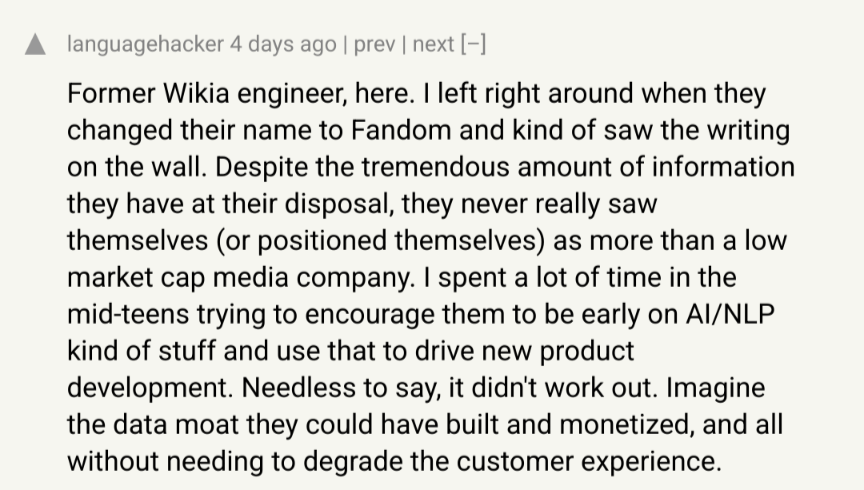

lol fandom could have been even worse

data moat

That’s just the kind of innovation we need to get over this primitive and outdated impulse to cooperate with one another.

ok my first thought was to make a joke about castle warfare, despite my knowledge set being ephemera from a childhood appreciating tech trees in video games. So I did some research:

- The etymology of “moat” is that it comes from the word “motte”. I will not elaborate.

- Moats were effective against early forms of siege warfare, like battering rams, siege towers, and mining out the foundations of a castle’s defences, or anything that required approaching the castle directly

- Moats were made somewhat obsolete by siege artillery, which did not need to be in the direct vicinity of the castle

Err so yeah. Make your own jokes, ig.

Anyway, this has been MoatFacts™️. Paging @skillissuer@discuss.tchncs.de for better commentary*

In this context, “moat” is a cargo-cult invocation of Warren Buffett and Benjamin Graham. Just another square on the hackernews bingo

idk what to exactly put there, moat is still an obstacle even in modern context, but assault on a castle with a moat using modern weaponry would be hilariously one-sided. you can suppress defenders with something, use a bridge layer to get inside the moat, then let combat engineers do their shenanigans to “open” castle one way or another. or you can use helis to do the same, or you can just level it all with artillery or airstrike or maybe even loads of ATGMs

that said it’s not completely useless. moats but dry were used as a part of fixed fortifications in ww1 quite successfully. freshly invented electrified barbed wire fence and machine guns made them quite hard to pass, especially if you are, say, a peasant from tula oblast born in 1898 that has never seen powerline before. i think the last proper moat use in large-scale warfare happened during iran-iraq war, in battle of the marshes, when iraqis flooded previously dry area known as fish lake and put underwater coils of barbed wire and high-voltage cables. defensive tactic used there was to shoot at assaulting iranians to make them abandon or fall out of their boats or amphibious vehicles, then when they were in the water high voltage lines were energized. iranians eventually crossed the marshes entirely using speedboats. maybe it’s not that outdated considering that last recorded bayonet charge happened in 2004 (by brits in iraq). ymmv

I will internalise this for the next time data moats come up!

iirc they had tools to import data from other wikis into theirs, but not tools to export.

they have the MediaWiki database dumps, which are XML so you can do anything with them!! *

* the actual page text is a single field
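For the curious, here is a rough sketch of what “XML, but the page text is a single field” means in practice. The sample dump is made up for illustration (including the page title and the export namespace version), and real dumps carry far more metadata per page. It only shows that the parser hands you the raw wikitext as one opaque string.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily trimmed dump. The namespace version is an assumption;
# real exports may declare a different one.
SAMPLE_DUMP = """<mediawiki xmlns="http://www.mediawiki.org/xml/export-0.10/">
  <page>
    <title>Lady Vegeta</title>
    <revision>
      <text>'''Lady Vegeta''' is a [[rare card]] in {{set|27}}.</text>
    </revision>
  </page>
</mediawiki>"""


def local(tag):
    """Strip the '{namespace}' prefix ElementTree puts on tag names."""
    return tag.rsplit("}", 1)[-1]


def pages(xml_text):
    """Return (title, wikitext) pairs; the wikitext is left unparsed."""
    root = ET.fromstring(xml_text)
    out = []
    for page in root:
        if local(page.tag) != "page":
            continue
        title = None
        text = None
        for el in page.iter():
            name = local(el.tag)
            if name == "title":
                title = el.text
            elif name == "text":
                text = el.text  # one string: links, templates and all
        out.append((title, text))
    return out


for title, text in pages(SAMPLE_DUMP):
    print(title, "->", text)
```

The XML part is the easy bit. Turning that one string of wikitext into structured data is the part the dump leaves to you.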

imagine how they could have monetized it

Surely Wikia could have catapulted to the upper echelons of the Fortune 500 if they had just moved faster to gatekeep the facts about gender-swapped Lady Vegeta being a rare card in set 27 of the Dragonball gacha game

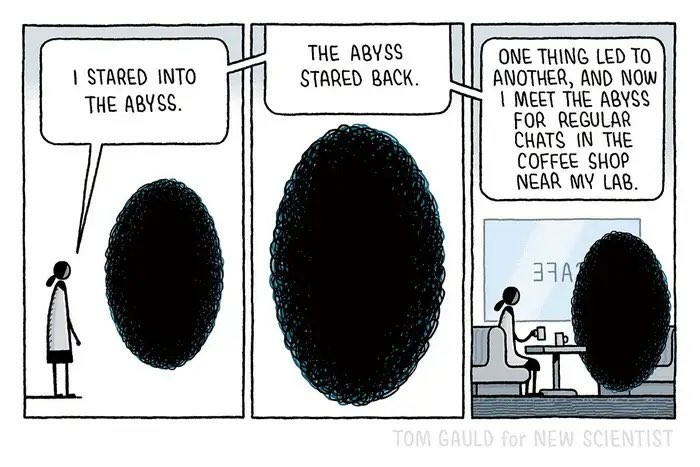

fig. 1: how awful.systems works

In other news, a lengthy report about Richard Stallman liking kids just dropped.

Hacker News has a thread on it. It’s a dumpster fire, as expected.

Jesus GNU Christ, Live your life so that no one ever produces a systematic classification of your opinions that looks like this

Ted_Danson_choosing_between_clam_chowder_fountain_and_bees_with_teeth.webm

Little of this was news to me, but damn, laid out systematically like that, it’s even more damning than I expected. And the stuff that was new to me certainly didn’t help.

Very serious people at HN at it again:

The only argument I find here against it is the question of whether someone’s personal opinions should be a reason to be removed from a leadership position.

Yes, of course they should be! Opinions are essential to the job of a leader. If the opinions you express as a leader include things like “sexual harassment is not a real crime” or “we shouldn’t give our employees raises because otherwise they’ll soon demand infinite pay” or “there’s no problem in adults having sex with 14 year olds and me saying that isn’t going to damage the reputation of the organization I lead” you’re a terrible leader and an embarrassment of a spokesman.

Edit: The link submitted by the editors is [flagged] [dead]. Of course.

The only argument I find here against it is the question of whether someone’s personal opinions should be a reason to be removed from a leadership position.

What do these people think leadership is?

No, obviously opinions like

- “if my MIT AI Lab mentor had sex with an underage sex worker on Epstein’s teen rape island, that was only because he thought she consented”,

- “stealing a kiss from a woman is fine and not a sexual assault, maybe perhaps at most it’s supposedly sexual harassment which is not real and is actually fine”,

- “I don’t believe in bereavement leave. What if all your close friends and family die one after another? It’s conceivable you would be gone from the office for days, or weeks, if not months.1 What if you lie about who is dying?”,

- “Overtly sexualizing ‘parody’ ceremonies for a semi-fictitious church of Emacs centering around unprepared girls and women in my audience are fine and when people participate in them, there is certainly no peer pressure involved, not that I care if there is”,

- “It’s fine to throw a tantrum about Emacs supporting another compiler infrastructure Not Invented Here. LLVM/Clang is supported by Apple and has a permissive license instead of GPL so it’s basically proprietary, right?”,

- “You may have heard or read critical statements about me; <a href=https://website.made.by.my.sychophants.example.com>please make up your own mind.</a>”,

are in the same category as “I think pineapple on pizza is delicious/disgusting” when it comes to evaluating someone’s aptitude as a leader.

I advocate for Free Software despite RMS. I recognize the value of his good contributions and that I might not even have the concept of Free Software and its value without him. I don’t want to throw the baby out with the bathwater, and the editors of the report make it clear that neither do they. I think Stallman is an embarrassment and a liability for the Free Software movement. I respect his moral integrity on software freedom and some other political causes (including his clumsy, yet justified condemnations of police brutality, and boycott of Coca-Cola company due to their use of fascist death squads to suppress Colombian trade unions), but his awful takes on issues of basic respect and empathy toward women, suspiciously fervent willingness to defend sexual relations between teenage minors and adults, and a number of other gaffes (both ones listed in the report and some that are less morally detestable, but still embarrassing) are still bad enough that I’d be willing to elect an inanimate carbon rod as the leader of the movement before him.

1: It’s conceivable that Richard Matthew Stallman has a secret humiliation fetish he indulges in by installing Oracle products on his secret Windows 11 computer while drinking Coca-Cola. I do not wish to imply that Richard Matthew Stallman has a secret humiliation fetish he indulges in by installing Oracle products on his secret Windows 11 computer while drinking Coca-Cola, but I will simply point out it’s conceivable that Richard Matthew Stallman has such a secret humiliation fetish involving the aforementioned details, and that I have conceived such a scenario simply to prove it is conceivable, that (etc.).

Especially leadership of a political organization that’s basically just there to turn his opinions into code and publish his essays.

Something to which they, and people like them, are entitled

I had heard some vague stuff about this, but had no idea it was this bad. Also, I didn’t know how much of a fool RMS was: “RMS did not believe in providing raises — prior cost of living adjustments were a battle and not annual. RMS believed that if a precedent was created for increasing wages, the logical conclusion would be that employees would be paid infinity dollars and the FSF would go bankrupt.” (It gets worse btw).

Top level comment at time of posting:

“This might not look that bad, but consider the post-USSR…”

???

No need for these soviet level mental gymnastics. You can just say he needs to be removed permanently.

the lobste.rs thread is a trash fire too.

of note is that the Stallman defenders from about 3 years back (when he waded in unprompted in a mailing list meant for undergrads at MIT and was pretty damn sure that Marvin Minsky never had sex with one of Epstein’s victims, and if he did, it would have been because he was sure she wasn’t underage) have registered https://stallman-report.com which redirects to their lengthy apologia. Could be worth taking into account if you want to spread the original around

I don’t think anything in the report is new, is it? Isn’t this the exact weirdness that got him kicked off the board in the first place? I was shocked when he was quietly added back to the board; I really thought the allegations would stick the first time.

Nice to have it all in one place though.

There’s a little bit of new stuff in there, but it’s all just corroborating the old or relatively minor. Still, it’s a lot in one place.

(Rationally) “If there’s grass on the field play ball”

that person’s inability to spell millennia makes me irrationally angry, and the rest of it is even fucking worse

okay, I’ve changed my mind, I’m in favour of spacex now. so that we can cheaply airlock these fucking pieces of shit.

🎶 Tryna strike a chord and it’s probably A minorrrrrrrrrrr

(seriously, what the fuck HN)

Ignorance is a choice. That thread is full of bad choices.

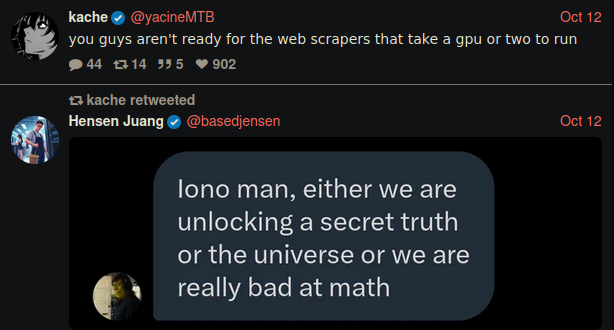

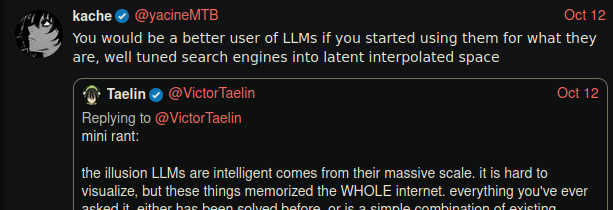

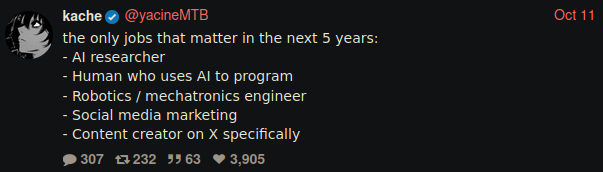

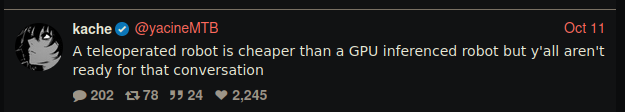

Musk’s twitter is unleashin/g/ the worst posters that the CS world has to offer

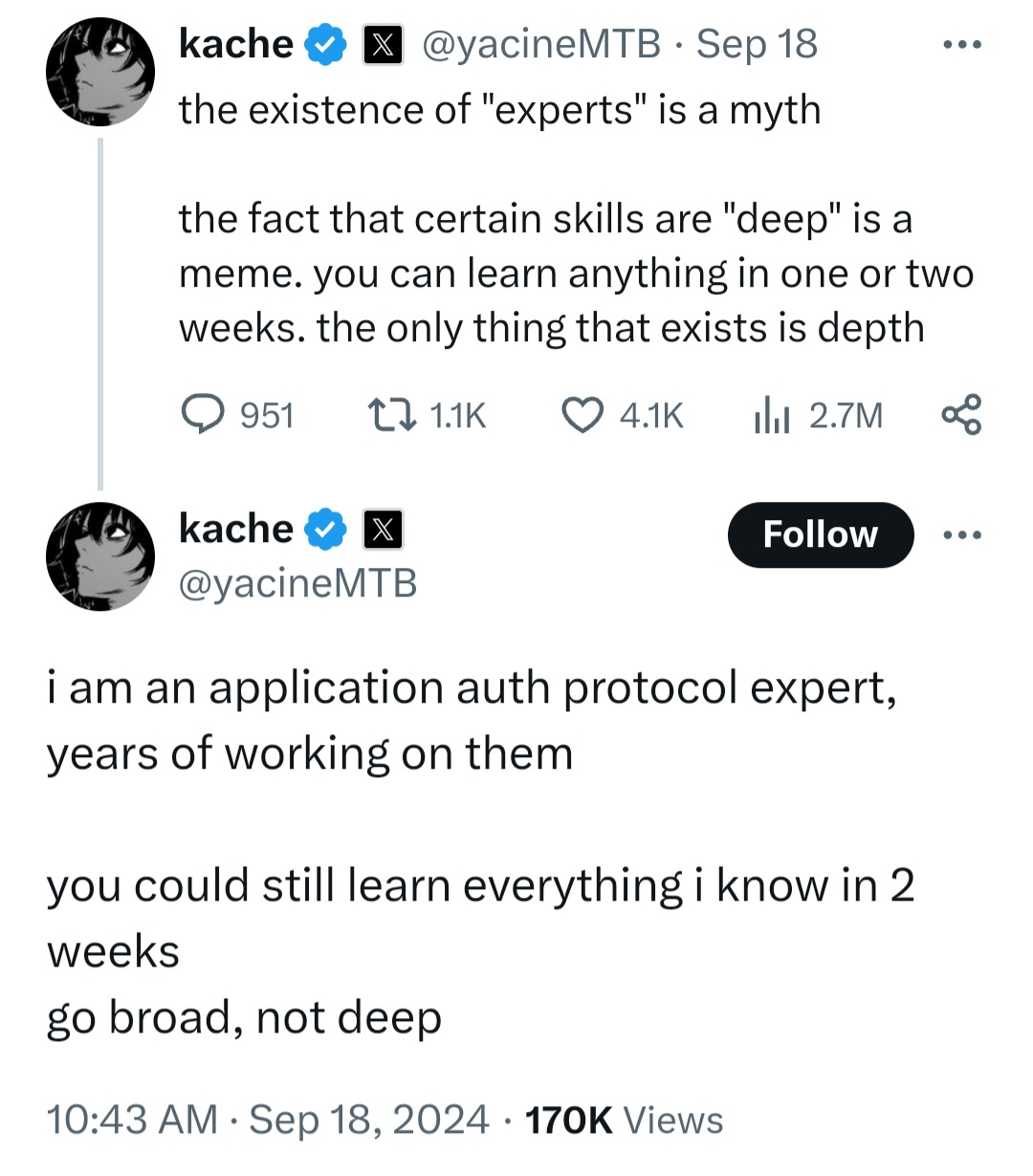

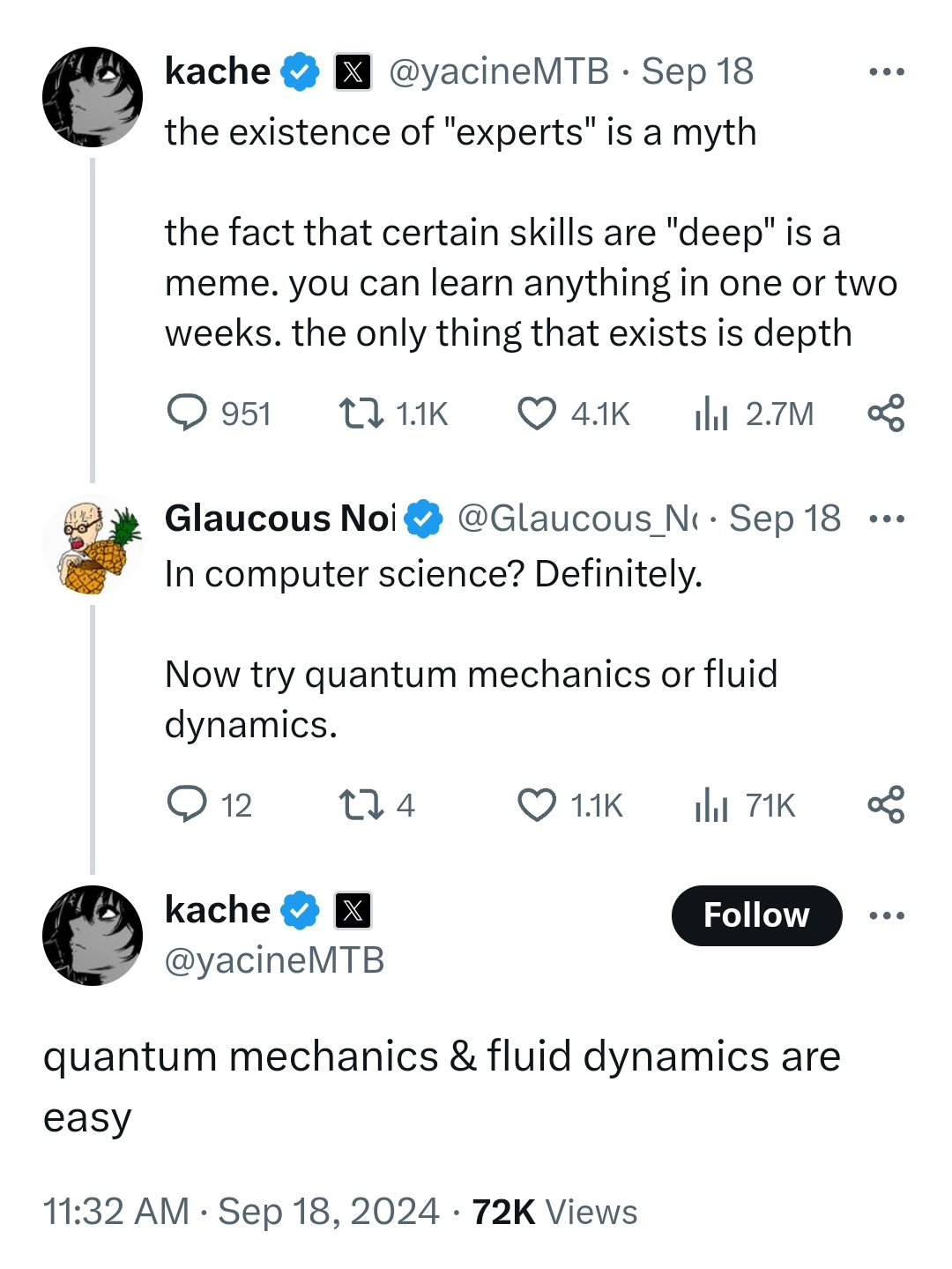

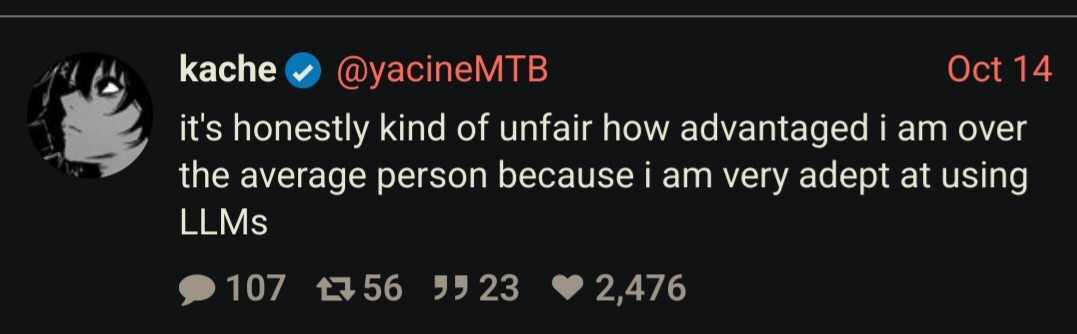

the raw, mediocre teenage energy of assuming you can pick up any subject in 2 weeks because you’ve never engaged with a subject more complex than playing a video game and you self-rate your skill level as far higher than it actually is (and the sad part is, the person posting this probably isn’t a teenager, they just never grew out of their own bullshit)

given how oddly specific “application auth protocol” is, bets on this person doing at best minor contributions to someone else’s OAuth library they insist on using everywhere? and when they’re asked to use a more appropriate auth implementation for the situation or to work on something deeper than the surface-level API, their knowledge immediately ends

have implemented jwt (used the library, first in the company)

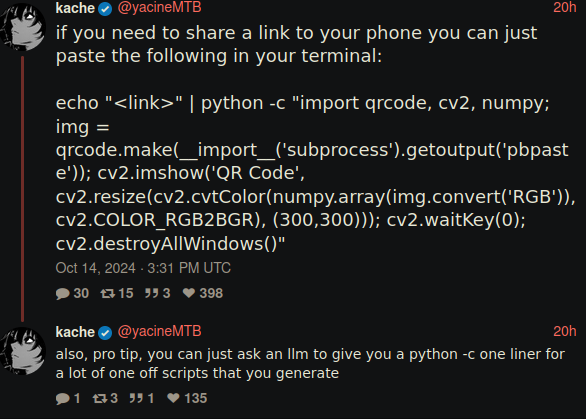

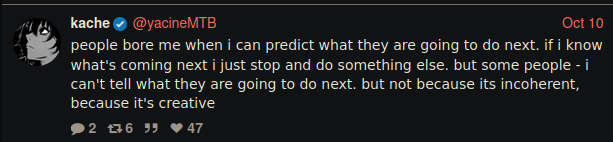

so uh, they keep self-fellating on Twitter about how they invented their own CAD program over the objections of the haters

here it is, it’s an extremely thin wrapper around the typescript version of manifold with live reloading on changes. note that not only is manifold already a CAD library, they already have a web-based editor that reloads the model on code changes, and kache’s live reloading is just nodemon. the server part looks like it’s barely modified from a code example. the renderer is just three.js grabbed from a CDN. it’s so weird they didn’t take the necessary 2 weeks to learn how to write the CAD parts of the CAD system they made!

hackers and builders (both in the a16z definition of) are some of the fucking worst things out there today

builder (derogatory)

founder mode (derogatory)

founder mode derogatory? [flagged]

Marc the Builder employs many elite code ninjas who are experts at prompting ChatGPT for npm commands

chatgpt: for people who don’t want to be gaslit only by bad cli tools

the absolute worst type of coworker from my cubicle days: heard about a technology at a conference, decided they invented it

Fun fact: The plain vanilla physics major at MIT requires three semesters of quantum mechanics. And that’s not including the quantum topics included in the statistical physics course, or the experiments in the lab course that also depend upon it.

Grad school is another year or so of quantum on top of that, of course.

(MIT OpenCourseWare actually has fairly extensive coverage of all three semesters: 8.04, 8.05 and 8.06. Zwiebach was among the best lecturers in the department back in my day, too.)

I almost want to go Twitter diving to see if kache has the requisite unhinged rant about how universities are only making quantum physics hard to get money/because of woke or whatever

e: holy shit I already regret this

yeah, 3b1b animations can take you through all of undergrad math in probably a month if it all existed and you used anki

We could bottle this arrogance and sell it as an emetic.

And besides, we all know that mathematics videos peaked with the Angle Dance.

as one of many people here who has undergone undergrad math, i reckon a month of youtube and anki might not be enough for even intro to linear algebra. I’m even saying this as someone who skipped all lectures and crammed before all the tests.

oh, cool, they also claim to be a twitter engineer. that’s probably telling too (if true)

i see your twitter engineer and i raise you elite promptfondler

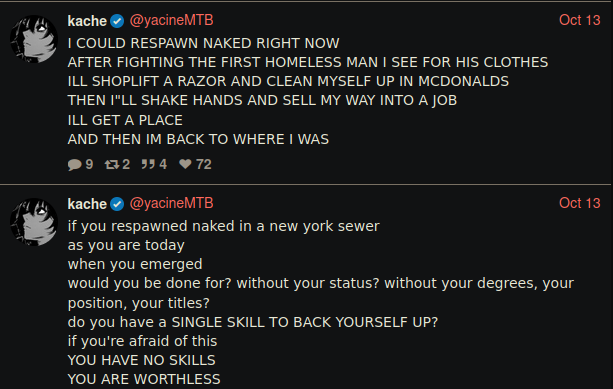

there’s more

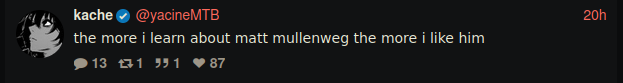

or you could use something like qrencode that already is a thing and pipe it to image viewer of your choice
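A minimal sketch of that suggestion, staying in Python since the screenshot’s one-liner apparently was. It assumes the `qrencode` CLI is installed and on `PATH`. The helper names are made up for illustration, and the choice of image viewer is left to you.

```python
import shutil
import subprocess
from typing import List, Optional


def qrencode_command(text: str) -> List[str]:
    # "-o -" asks qrencode to write the image (PNG by default) to stdout.
    return ["qrencode", "-o", "-", text]


def qr_png_bytes(text: str) -> Optional[bytes]:
    """Return PNG bytes for `text`, or None if qrencode is not installed."""
    if shutil.which("qrencode") is None:
        return None
    result = subprocess.run(
        qrencode_command(text), check=True, capture_output=True
    )
    return result.stdout


png = qr_png_bytes("https://example.com")
if png is None:
    print("qrencode not found; install it first")
else:
    # Hand these bytes to whatever viewer you like, or write them to a file.
    print(f"got {len(png)} bytes of PNG")
```

No clipboard tricks and no lying box required, just an existing tool doing the one job it was written for.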

yeah which might it be

he’s also lying box understander

(idk why these screenshots get stretched to entire width available sometimes and sometimes they don’t)

there’s a lot of this

we had these since manhattan project. sit tf down

i think we have different standards on being coherent

- I don’t know Matt Mullenweg, but I’m afraid to ask

- I love the nonsensical misleading QR code one-liner, conflictingly using both `echo "<URL>" |` and mac-only `getoutput("pbpaste")` (yuck).

- It is famously easy to maintain a job and mental well-being when you have no stable home and few sets of clothes! Famously you don’t need a registered address to open a bank account, and you don’t need a bank account to get a registered address!

- I guess you could run the GPU for 1000 years.

- One needs to learn that interpolation = confabulation = useless bullshit.

or you could use something like qrencode that already is a thing and pipe it to image viewer of your choice

(my bad, I actually just looked at the images earlier, missed that you said that)

finding and looking up information you need effectively is a skill that is both very useful and i don’t think it’s taught explicitly, it’s a byproduct of being taught how to do research more generally. slapping a lying box in its place is not a substitute

but what if the lying box smiled at me and made me feel good about myself, surely that means it’s trustworthy right?

(wipes away a single tear)

Beautiful, man, just beautiful

i stared into abyss again and he can’t grasp why flywheels for energy storage don’t work while trying to make happen a startup that sells hardware

“qm and fluid dynamics are easy” lol

jesus christ

yeah don’t do it to yourself. I forget how I originally noticed this weirdo, it may have been through amolitor99’s continuous anthropology safari of TPOT freaks. Speaking of which, somebody needs to get that guy over here

i have tried occasionally but will try again

dammit now I’m going to have to look at twitter

tpot fresh on my mind, too. just yesterday i was telling someone about how one of the people semi in that cluster had me going 🤨 and then had to explain a little about some of the highlights of tpot

Oh. He retweets Cremieux.

whoa…on second thought, maybe this dude is having a manic episode or something? yeesh!

This person has certainly committed to this philosophy, even to the extent of spending less than one week of thought coming to this very conclusion.

twitter gon’ have nothin’ left but the cranks

Just guys like that and guys like this

kache miss

and hitting all the Ls

I need to look up what auth protocols this guy has worked on so I can stay away from them.

conversely, it might be a rich vein of toctou

Oh I certainly did meet a lot of people employed in auth related stuff that clearly spent only 2 weeks on learning anything about OpenID and I certainly didn’t not hate their guts and wished they were replaced by a small shell script

Today I was looking at buying some stickers to decorate a laptop and such, so I was browsing Redbubble. Looking here and there I found some nice designs and then stumbled upon a really impressive artist portfolio there. Thousands of designs, woah, I thought, it must have been so much work to put that together!

Then it dawned on me. For a while I had completely forgotten that we live in the age of AI slop… blissful ignorance! But then I noticed the common elements in many of the designs… noticed how everything is surrounded by little dots or stars or other design trinkets. Such a typical AI slop thing, because somehow these “AI” generators can’t leave any whitespace, they must fill every square millimeter with something. Of course I don’t know for sure, and maybe I’m doing an actual artist injustice with my assumption, but this sure looked like Gen-AI stuff…

Anyway, I scrapped my order for now while I reconsider how to approach this. My brain still associates sites like redbubble or etsy with “art things made by actual humans”, but I guess that certainty is outdated now.

This sucks so much. I don’t want to pay for AI slop based on stolen human-created art - I want to pay the actual artists. But now I can never know… How can trust be restored?

I’ve taken to calling the constant background sprinkles and unnecessary fine detail in gen ai images “greebles” after the modelling and cgi term. Not sure if they have a better or more commonplace name.

It’s funny, meaningless bullshit diagrams on whiteboards in the backgrounds of photos were a sure sign of PR shots or lazy set dressing, and now they’re everywhere signifying pretty much the same thing.

Sadly I think the only way to trust you are not getting a lot of AI art is by starting to follow a lot of artists you like on social media. Just going to a site which sells things seems a bit risky atm.

As more and more browsers are enshittifying, this is a small reminder that Brave is not a great alternative.

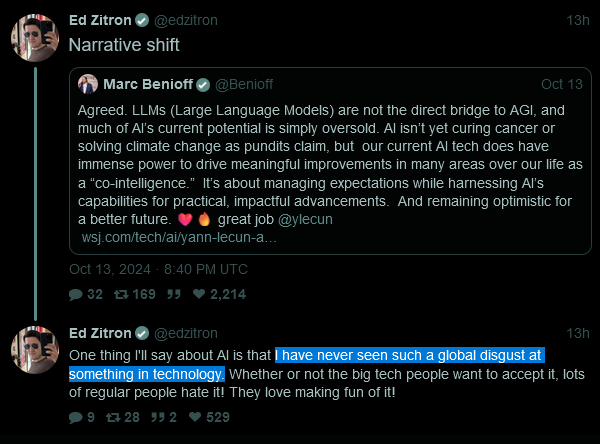

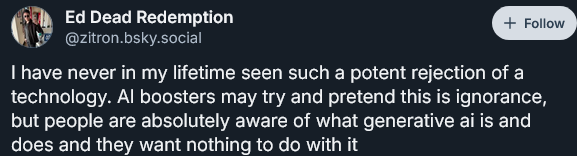

New pair of Tweets from Zitron just dropped:

I also put out a lengthy post about AI’s future on MoreWrite - go and read it, it’s pretty cool

Boo! Hiss! Bring Saltman back out! I want unhinged conspiracy theories, damnit.

It feels like this is supposed to be the entrenchment, right? Like, the AGI narrative got these companies and products out into the world and into the public consciousness by promising revolutionary change, and now this fallback position is where we start treating the things that have changed (for the worse) as fait accompli and stop whining. But as Ed says, I don’t think the technology itself is capable of sustaining even that bar.

Like, for all that social media helped usher in surveillance capitalism and other postmodern psychoses, it did so largely by providing a valuable platform for people to connect in new ways, even if those ways are ultimately limited and come with a lot of external costs. Uber came into being because providing an app-based interface and a new coat of paint on the taxi industry hit on a legitimate market. I don’t think I could have told you how to get a cab in the city I grew up in before Uber, but it’s often the most convenient way to get somewhere in that particular hell of suburban sprawl unless you want to drive yourself. And of course it did so by introducing an economic model that exploits the absolute shit out of basically everyone involved.

In both cases, the thing that people didn’t like was external or secondary to the thing people did like. But with LLMs, it seems like the thing people most dislike is also the main output of the system. People don’t like AI art, they don’t like interacting with chatbots basically anywhere, and the confabulation problems undercut their utility for anything where correlation to the real world actually matters, leaving them somewhere between hilariously and dangerously inept at many of the functions they’re still being pitched for.

Like Vitalik Buterin creating eth because he was mad his op WoW char got nerfed, we now have more gamers lore. J D Vance played a Yawgmoth’s Bargain deck.

the harris campaign must get his list. if he ran only three dark rituals the election is over

We cannot allow a dark ritual gap.

“O Cent O Pence (R)” is an anagram for “Necropotence”

Trump is clearly campaigning on the critically overlooked black draw engine platform, possibly to spite blue voters.

Edit: “One Percent Co.” was right there! It’s all coming together now!

brb, ran out of red cord.

Molly White reports on Kamala Harris’s recent remarks about Cryptocurrency being a cool opportunity for black men.

VP Harris’s press release (someone remind me to archive this once internet archive is up). Most of the rest of it is reasonable, but it paints cryptocurrency in a cautiously positive light.

Supporting a regulatory framework for cryptocurrency and other digital assets so Black men who invest in and own these assets are protected

[…]

Enabling Black men who hold digital assets to benefit from financial innovation.

More than 20% of Black Americans own or have owned cryptocurrency assets. Vice President Harris appreciates the ways in which new technologies can broaden access to banking and financial services. She will make sure owners of and investors in digital assets benefit from a regulatory framework so that Black men and others who participate in this market are protected.

Overall there has been a lot of cryptocurrency money in this US election on both sides of the aisle, which Molly White has also reported extensively on. I kind of hate it.

“regulation” here is left (deliberately) vague. Regulation should start with calling out all the scammers, shutting down cryptocurrency ATMs, prohibiting noise pollution, and going from there; but we clearly don’t live in a sensible world.

Introducing the official crypto coin of the Harris-Walz ticket: JoyCoin! Trading under JOY. Every time a coin is minted, we shoot someone from the global south in the head.

who tf in fourth year of our lord covid puts money on fire in crypto

Me, a nuclear engineer reading about “Google restarting six nuclear power plants”

lol, lmao even

Future headline: “Google quietly shuts down six nuclear power plants”

Zitron’s given commentary on PC Gamer’s publicly pilloried pro-autoplag piece:

He’s also just dropped a thorough teardown of the tech press for their role in enabling Silicon Valley’s worst excesses. I don’t have a fitting Kendrick Lamar reference for this, but I do know a good companion piece: Devs and the Culture of Tech, which goes into the systemic flaws in tech culture which enable this shit.

Over on /r/politics, there are several users clamoring for someone to feed the 1900 page independent counsel report into an LLM, which is an interesting instance of second-order laziness.

They also seem convinced that NotebookLM is incapable of confabulation which is hilarious and sad. Could it be sneaky advertising?

apparently those qualcomm NPUs (the “AI assist” chips in the copilot(?) laptops) aren’t very good

The what in the what now?

What a terrible day to tech.

(yes this post is a creative attempt at generating alt text for my image).

Thus leading to this sneer on HN. I’m quoting it in entirety; click through for Poe’s Law responses.

I was telling someone this and they gave me link to a laptop with higher battery life and better performance than my own, but I kept explaining to them that the feature I cared most about was die size. They couldn’t understand it so I just had to leave them alone. Non-technical people don’t get it. Die size is what I care about. It’s a critical feature and so many mainstream companies are missing out on my money because they won’t optimize die size. Disgusting.

gonna have to ship this person a 20d100 kit. maybe custom woodgrain? show some thought, y’know

wtf are NPUs anyway? some specialised vector maths thing?

non processing units

inference-tasks focused coprocessor

cope rocessor

hang on I have another.

NPU? More like, N-Pee-eeeww!!

God, I hope this is a scam, and that whoever is running it is just smashing together today’s buzzwords to print money.

This 180$ ebook better be completely autoplagged and in no way intended to be informational.

the upside of it listing a pile of author names: one can go look up their published works, and add them to crank trackers if necessary (seems likely)

the ToC is some fantastical fucking nonsense

this is one hell of a hat trick

only needs a quantum chapter somewhere in there for bonus scoring round…

I bear news from the other place!

https://www.reddit.com/r/australia/comments/1g3zt5b/hsc_english_exam_using_ai_images/

Post content reproduced here:

autoplag image of some electronics on a table

hello, as a year 12 student who just did the first english exam, i was genuinely baffled seeing one of the stimulus texts u have to analyse is an AI IMAGE. my friend found the image of it online, but that’s what it looked like

for a subject which tells u to “analyse the deeper meaning”, “analyse the composer’s intent”, “appreciate aesthetic and intellectual value” having an AI image in which you physically can’t analyse anything deeper than what it suggests, it’s just extremely ironic 😭 idk, [as an artist who DOESNT use AI]* i might have a different take on this since i’m an artist, what r ur thoughts?

*NB: original post contains the text: “as an artist using AI images” but this was corrected in a later comment:

also i didn’t read over this after typing it out but, meant to say, “as an artist who DOESNT use AI”

In a twisted way, this makes sense as an exercise for English class. Why would someone go to an autoplag image generator, type in a prompt (perhaps something like “laptop and smartphones on a table at a lakefront”) and save this image? It’s a question I can’t easily answer myself. It’s hard to imagine the intention behind wanting to synthesize this particular picture, but it’s probably something we’ll be asking often in the near future.

I can even understand the shrimp Jesus slop or soldiers with huge bibles stuff to an extent. I can understand what the intended emotional appeal is and at least feel something like bewilderment or amusement about the surreality of them. This one would be just banal even if it were a real photo, so why make this? The AI didn’t have intent or imbue meaning in the image but surely someone did.